Peer review means that experts from different fields or disciplines evaluate the quality of research and assessments. It improves that quality so that students are evaluated on true understanding rather than on one person's interpretation. Let's look at why professors are choosing peer review in assessment design.

Designing questions in education has been private work for years. It is an unspoken truth: a teacher or professor designs the questions, finalizes the assessment, and moves on to the next responsibility. This model worked when assessments were static and classrooms were small. It no longer fits the reality of modern education. Curricula change rapidly, diverse learning needs keep emerging, outcome-based evaluation is strongly emphasized, and technology has become an integral part of teaching.

Today's educators and professors need the expertise to create AI-supported assessments using platforms like PrepAI. Such platforms let teachers assess an array of skills (including critical thinking) in less time and complete assessment tasks within a given period. Collaborative technology and academic insight are now critical to quality assessment design. Working alone creates uncertainty about assessment quality, whereas working collaboratively builds confidence that an assessment truly reflects both knowledge and ability level. Recent research and institutional practice also suggest that peer-reviewed assessment design leads to greater clarity, fairness, and student performance, especially when combined with AI-based assessment tools.

With the help of AI, the category measuring student attention and engagement grew by 28.8 percent, while the category for students demonstrating passion and motivation climbed by 15.27 percent. Across institutions and disciplines, more educators are beginning to open their assessment and question design process to peer review, not as a form of scrutiny, but as a way to improve clarity, fairness, and learning outcomes. This shift is not about giving up academic authority. It is about strengthening assessment quality through shared expertise, supported by AI-based assessment tools and collaborative platforms like the PrepAI Community.

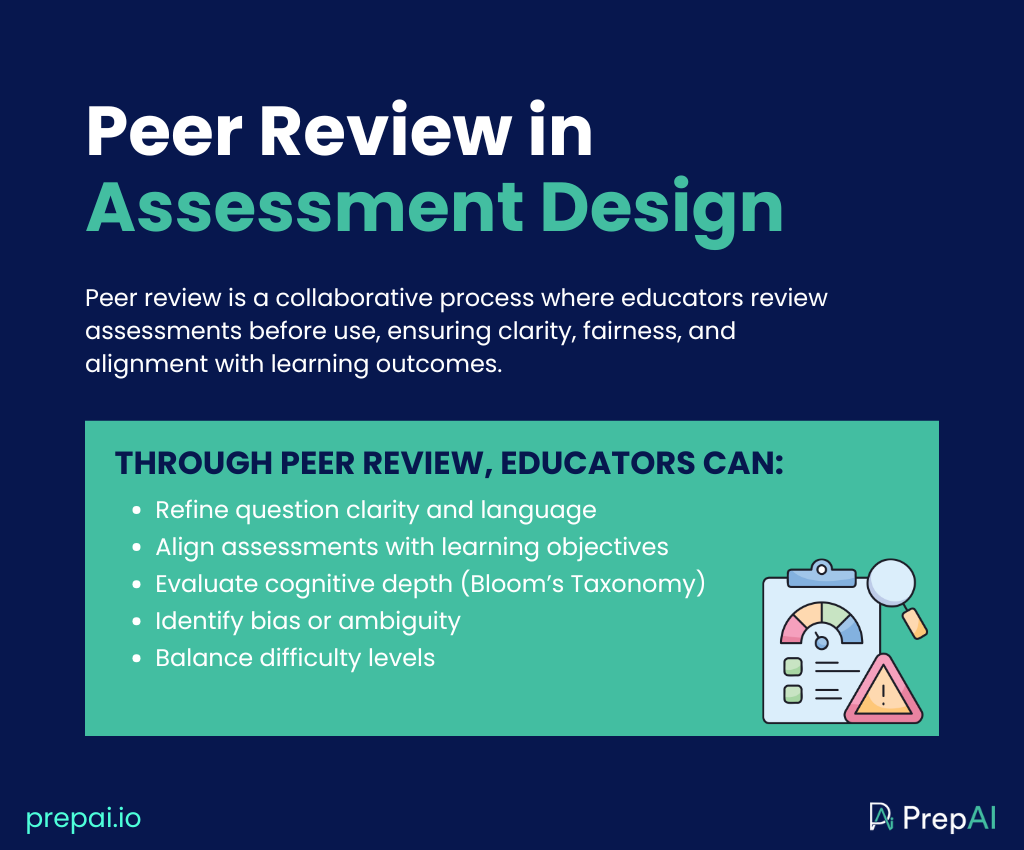

What is Peer Review in Assessment Design?

In assessment design, peer review means that educators or professors draft and check questions, rubrics, or assessments with each other's help. It matters because it provides feedback before the assessments are administered to students. The process typically involves:

- Reviewing question clarity and language

- Checking alignment with learning outcomes

- Evaluating cognitive depth using Bloom’s Taxonomy

- Identifying bias or ambiguity

- Validating difficulty balance
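Some of these checks can be partly automated before a human reviewer reads the draft. The following is a minimal Python sketch, not a PrepAI feature: the `Question` fields, the `review_flags` function, and the 60 percent threshold are all illustrative assumptions. It only flags items for discussion; judging clarity, bias, and ambiguity still requires a colleague.

```python
from collections import Counter
from dataclasses import dataclass

# Bloom's Taxonomy levels, ordered from lowest to highest cognitive depth.
BLOOM_LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]


@dataclass
class Question:
    """Hypothetical record for one draft question (field names are assumptions)."""
    text: str
    bloom_level: str       # expected to be one of BLOOM_LEVELS
    difficulty: str        # e.g. "easy", "medium", or "hard"
    learning_outcome: str  # ID of the outcome the question targets; "" if none


def review_flags(questions, max_share=0.6):
    """Return plain-language flags for a peer reviewer to discuss.

    max_share is an assumed threshold: a flag is raised when more than
    this fraction of questions shares one difficulty or is lower-order.
    """
    total = len(questions)
    if total == 0:
        return ["No questions to review."]

    flags = []

    # Alignment check: every question should name a learning outcome
    # and use a recognized Bloom level.
    for i, q in enumerate(questions, start=1):
        if not q.learning_outcome:
            flags.append(f"Q{i}: not linked to a learning outcome")
        if q.bloom_level not in BLOOM_LEVELS:
            flags.append(f"Q{i}: unknown Bloom level '{q.bloom_level}'")

    # Difficulty balance: flag any single difficulty that dominates the paper.
    for level, count in Counter(q.difficulty for q in questions).items():
        if count / total > max_share:
            flags.append(f"Difficulty skewed: {count}/{total} questions are '{level}'")

    # Cognitive depth: flag papers dominated by the two lowest Bloom levels.
    lower_order = sum(1 for q in questions if q.bloom_level in BLOOM_LEVELS[:2])
    if lower_order / total > max_share:
        flags.append(f"Cognitive depth: {lower_order}/{total} questions are lower-order")

    return flags


draft = [
    Question("Define photosynthesis.", "remember", "easy", "LO1"),
    Question("List the stages of mitosis.", "remember", "easy", ""),
    Question("Explain why leaves are green.", "understand", "easy", "LO2"),
    Question("Design an experiment to test light intensity.", "create", "hard", "LO3"),
]

for flag in review_flags(draft):
    print(flag)
```

A report like this gives reviewers a shared starting point, so the discussion can focus on wording and fairness rather than on counting question types.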

According to the UK Quality Assurance Agency (QAA), peer review improves the transparency, reliability, and fairness of assessments by ensuring that evaluation decisions are driven by learning outcomes rather than by individual interpretation.

Peer assessment among students, a related practice, also makes assessing student work easier and more effective. It uses predetermined criteria, which give students opportunities to develop critical thinking, self-assessment skills, and a deeper understanding of course materials. Peer assessment encourages students to take ownership of their own learning and promotes analytical and communication skills. In addition to providing opportunities for formative learning, it may also reduce the time educators spend on grading.

Why Are Professors Shifting to Peer Review in Assessment Design?

Professors and educators are shifting to peer review to improve assessment quality, because designing assessments is far more complex than it was a decade ago. Educators are now expected to:

- design skill-based and outcome-oriented questions

- avoid rote-memory evaluation

- accommodate diverse learning abilities

- ensure fairness across cohorts

- defend assessment choices during audits or reviews

These expectations increase their workload, and they must produce more assessments in less time so that students can prepare well. Educators and professors already carry a heavy load of teaching, mentoring, research, and more. Designing assessments alone on top of all this rarely produces quality assessments and often results in uncertainty, rework, and fatigue.

To avoid this pain, they need to rethink how assessments have traditionally been designed. For question design, professors and educators can use AI question generators for teachers to draft questions, generate variations, align difficulty levels, and structure papers faster. PrepAI helps reduce the time spent on repetitive tasks so educators can focus on academic judgment rather than formatting and redrafting.

Once assessments are created, professors still need confidence that the questions are clear, fair, and aligned with learning outcomes. This is why peer review has become a critical next step. By becoming part of the PrepAI Community, professors and teachers can discuss their questions, clear doubts, seek feedback on difficulty levels or rubrics, and learn how others are approaching similar assessment challenges.

Together, PrepAI and the PrepAI Community support a complete workflow:

- PrepAI helps educators create assessments faster and more effectively.

- PrepAI Community enables peer review, discussion, and shared learning to improve assessment quality.

By making this shift, educators move from working in isolation to working with clarity and support, which helps them follow education best practices without losing ownership of their assessments. If you are an educator looking to simplify assessment creation, improve quality through peer review, and learn from others facing the same challenges, you can start by joining the community today.

How Does Assessment Quality Improve Through Discussion?

Assessment quality rarely improves in isolation. It improves when ideas are tested, questioned, and refined through academic discussion, which is what peer review in assessment design provides. Research published in Assessment and Evaluation in Higher Education reports that assessments reviewed by other academics consistently show:

- Higher construct validity

- Fewer technical and conceptual errors

- Clearer alignment with intended learning outcomes

PrepAI, an AI assessment platform, plays a supporting role. It helps educators generate and structure assessments efficiently, using AI to draft questions, create variations, and align difficulty levels. However, the real improvement in quality happens when AI-generated assessments are discussed and refined through peer review. Our platform is used by many universities and institutions, and the PrepAI Community lets you connect with experts across them.

Even if your university is not using our AI assessment platform, you can still build a profile on the PrepAI Community. Once the profile is built, professors and teachers can share drafts, ask questions, clear doubts, and receive feedback from peers who understand the realities of teaching and evaluation. This combination of AI efficiency and human discussion ensures that assessments are not only created faster but also improved thoughtfully.

This ongoing discussion on the PrepAI Community also contributes to the professional identity of educators and professors. In many institutions, educators' deep work on assessment remains invisible: stored in folders, shared privately, or evaluated internally. It rarely becomes part of an educator's academic presence. As a result, professors and educators often do not get the recognition they deserve, which hinders their growth. On the PrepAI Community, contributors can gain visibility by:

- Leading discussions on assessment best practices

- Sharing innovative approaches to assessment design

- Helping peers navigate AI-supported tools in evaluation

- Contributing templates or frameworks that (with permission) can be reused

Why Does This Structure Matter in Modern Education?

The landscape of teaching and assessment is changing rapidly:

- Curricula are becoming more skill-based and outcome-focused.

- Learner populations are increasingly diverse.

- Academic integrity and fairness are under closer scrutiny.

- Technology is reshaping both learning and evaluation.

Traditional solo assessment design cannot keep up with these demands. Educators need connection, feedback, and perspective, not just tools. By combining AI-based assessment tools with peer review through the PrepAI Community, educators can improve both efficiency and quality. AI helps with speed and variation; peer review ensures the work is meaningful, fair, and academically sound.

This balance of human judgment, smart technology, and shared insight is what leads to truly effective assessments.

Join the PrepAI Community: https://community.prepai.io/

FAQ

What is peer review in assessment design?

Peer review in assessment design is the practice of educators collaboratively reviewing questions, rubrics, or assessment structures before they are given to students. It helps improve clarity, fairness, alignment with learning outcomes, and overall assessment quality. Unlike audits or inspections, peer review is meant to support educators, not evaluate them.

Why is peer review becoming important in modern education?

Modern education focuses on skill-based learning, outcome-oriented assessments, and diverse learner needs. Designing assessments alone often leads to uncertainty and rework. Peer review allows educators to discuss question design best practices, validate difficulty levels, and ensure assessments truly measure learning rather than memorization, making it a key educational best practice today.

What role do AI-based assessment tools play in question design?

AI-based assessment tools help educators draft questions faster, generate variations, align difficulty levels, and structure assessments efficiently. Tools like AI question generators for teachers reduce repetitive work so educators can focus on academic judgment. However, AI works best when combined with human review and peer discussion.